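A preliminary note: the InnoDB buffer pool maintains three lists: the LRU list, the flush list, and the free list.

In addition: a free page is always on the buffer pool's free list. Clean and dirty pages are always on the buffer pool's LRU list; a dirty page is also on the buffer pool's flush list.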

LRU list:

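The LRU list is functionally divided into two parts, LRU_young and LRU_old. By default, LRU_old makes up 3/8 of the list length. When a page is read in (by a get or by read-ahead), it is first linked at the head of LRU_old. When the page is first accessed (read or write), it is moved from LRU_old to the head of LRU_young, which is the head of the whole LRU list. This policy keeps read-ahead pages in LRU_old at first: a read-ahead page may never be accessed, so it should not go straight to the front of the LRU list.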
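
The midpoint insertion described above can be sketched in a few lines of Python. This is a toy model, not InnoDB's implementation: the class and method names (MidpointLRU, read_in, access) are made up for illustration, a plain list stands in for the linked list, and the old sublist is simply taken to be the last 3/8 of the list.

```python
OLD_RATIO = 3 / 8  # share of the list treated as LRU_old, per the text


class MidpointLRU:
    """Toy LRU list with midpoint insertion (hypothetical, for illustration)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = []  # index 0 = head (young end), last index = tail (old end)

    def _midpoint(self):
        # Index where the old sublist starts: the last OLD_RATIO of the list.
        return len(self.pages) - int(len(self.pages) * OLD_RATIO)

    def read_in(self, page):
        """Link a newly read (or read-ahead) page at the head of the old sublist."""
        evicted = None
        if len(self.pages) >= self.capacity:
            evicted = self.pages.pop()  # make room by evicting from the tail
        self.pages.insert(self._midpoint(), page)
        return evicted

    def access(self, page):
        """On access, move the page to the head of the whole list."""
        self.pages.remove(page)
        self.pages.insert(0, page)
```

In this sketch a page that is read in but never accessed drifts toward the tail and is evicted without ever displacing the pages at the head, which is the point of the policy. While the list is very short the old sublist is empty, so the first few pages land at the list tail directly.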

Flush list:

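Dirty pages on the flush list are ordered by their oldest_modification value (the LSN of the page's oldest unflushed modification). A larger oldest_modification means the page was modified more recently, so it sits toward the head of the flush list; a smaller oldest_modification means the page was modified earlier, so it sits toward the tail. When InnoDB flushes from the flush list, it starts at the tail and writes out enough dirty pages to advance the checkpoint, which keeps the system's recovery time bounded.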
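
The ordering and the tail-first flush can be illustrated with a small Python sketch. The FlushList class and its methods are hypothetical names, and the rule that the checkpoint can advance up to the oldest_modification of the tail page is a simplification of what the text describes.

```python
from collections import deque


class FlushList:
    """Toy flush list: entries are (page_id, oldest_modification) pairs."""

    def __init__(self):
        self.pages = deque()  # index 0 = head (newest), index -1 = tail (oldest)

    def mark_dirty(self, page_id, lsn):
        """Add a page when it is first dirtied; a page already listed keeps its place."""
        if any(pid == page_id for pid, _ in self.pages):
            return
        # LSNs only grow, so adding at the head keeps the list ordered.
        self.pages.appendleft((page_id, lsn))

    def flush_batch(self, n):
        """Write out up to n pages, starting from the tail (oldest first)."""
        return [self.pages.pop() for _ in range(min(n, len(self.pages)))]

    def checkpoint_limit(self, current_lsn):
        """The checkpoint cannot pass the oldest change that is still unflushed."""
        return self.pages[-1][1] if self.pages else current_lsn
```

Flushing from the tail removes exactly the pages that hold the checkpoint back, so each batch raises the limit returned by checkpoint_limit.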

Free list:

It keeps track of the free pages.

In this blog post, we’ll discuss how to use multi-threaded LRU flushing to prevent bottlenecks in MySQL.

In the previous post, we saw that InnoDB 5.7 performs a lot of single-page LRU flushes, which in turn are serialized by the shared doublewrite buffer. Based on our 5.6 experience we have decided to attack the single-page flush issue first.

Let’s start by describing a single-page flush. If the working set of a database instance is bigger than the available buffer pool, existing data pages will have to be evicted or flushed (and then evicted) to make room for queries reading in new pages. InnoDB tries to anticipate this by maintaining a list of free pages per buffer pool instance; these are the pages that can be immediately used for placing the newly-read data pages. The target length of the free page list is governed by the innodb_lru_scan_depth parameter, and the cleaner threads are tasked with refilling this list by performing LRU batch flushing. If for some reason the free page demand exceeds the cleaner thread flushing capability, the server might find itself with an empty free list. In an attempt to not stall the query thread asking for a free page, it will then execute a single-page LRU flush (buf_LRU_get_free_block calling buf_flush_single_page_from_LRU in the source code), which is performed in the context of the query thread itself.
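
A rough Python model of the two paths may help: the cleaner refilling the free list in batches, and the query thread falling back to a single-page flush when the list is empty. The class, its fields, and the counters are invented for illustration; only the parameter name innodb_lru_scan_depth comes from the text, and no locking is modeled here.

```python
from collections import deque


class BufferPoolInstance:
    """Toy model: LRU entries are (page_id, dirty) pairs; not InnoDB's structures."""

    def __init__(self, lru_pages, lru_scan_depth):
        self.lru = deque(lru_pages)      # index -1 is the LRU tail
        self.free = deque()              # page ids ready for immediate reuse
        self.lru_scan_depth = lru_scan_depth
        self.writes = 0                  # dirty pages written out, by any thread
        self.single_page_flushes = 0     # evictions done by query threads themselves

    def _evict_tail(self):
        page_id, dirty = self.lru.pop()
        if dirty:
            self.writes += 1             # a dirty page must be flushed before eviction
        return page_id

    def cleaner_lru_batch(self):
        """Cleaner thread: refill the free list up to the target length."""
        while len(self.free) < self.lru_scan_depth and self.lru:
            self.free.append(self._evict_tail())

    def get_free_block(self):
        """Query thread: take a free page, or free one itself if none is left."""
        if self.free:
            return self.free.popleft()
        self.single_page_flushes += 1    # the fallback path described above
        return self._evict_tail()
```

As long as cleaner_lru_batch runs often enough, get_free_block never touches the LRU list. Once demand outruns the cleaner, every request pays for an eviction (and possibly a write) in the query thread.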

The problem with this flushing mode is that it will iterate over the LRU list of a buffer pool instance, while holding the buffer pool mutex in InnoDB (or the finer-grained LRU list mutex in XtraDB). Thus, a server whose cleaner threads are not able to keep up with the LRU flushing demand will have further increased mutex pressure – which can further contribute to the cleaner thread troubles. Finally, once the single-page flusher finds a page to flush it might have trouble in getting a free doublewrite buffer slot (as shown previously). That suggested to us that single-page LRU flushes are never a good idea. The flame graph below demonstrates this:

Note how a big part of the server run time is attributed to a flame rooted at JOIN::optimize, whose run time in turn is almost fully taken by buf_dblwr_write_single_page in two branches.

The easiest way to avoid a single-page flush is, well, simply not to do it! Wait until a cleaner thread finally provides some free pages for the query thread to use. This is what we did in XtraDB 5.6 with the innodb_empty_free_list_algorithm server option (which has a “backoff” default). This is also present in XtraDB 5.7, and resolves the issues of increased contention for the buffer pool (LRU list) mutex and doublewrite buffer single-page flush slots. This approach handles the empty free page list better.
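
The idea of waiting instead of flushing can be sketched as a polling loop with growing sleeps. This is only an illustration of the "backoff" name: the function, the delay values, and the max_wait cutoff are assumptions, and the actual XtraDB wait logic is not described in this post.

```python
import threading
import time
from collections import deque


def get_free_page_backoff(free_list, lock, max_wait=1.0):
    """Query thread: poll the free list with growing sleeps instead of flushing.

    Returns a page id, or None if nothing showed up within max_wait seconds.
    All timing values here are made up for the sketch.
    """
    delay = 0.001
    waited = 0.0
    while True:
        with lock:
            if free_list:
                return free_list.popleft()
        if waited >= max_wait:
            return None
        time.sleep(delay)                # back off; leave the flushing to the cleaner
        waited += delay
        delay = min(delay * 2, 0.05)     # sleep longer each round, up to a cap


# Usage: a cleaner thread delivers a page shortly after the query starts waiting.
free_pages, free_lock = deque(), threading.Lock()


def cleaner_delivers_page():
    with free_lock:
        free_pages.append(42)


timer = threading.Timer(0.02, cleaner_delivers_page)
timer.start()
page = get_free_page_backoff(free_pages, free_lock, max_wait=5.0)
timer.join()
```

The query thread only holds the lock for the brief emptiness check, never for an LRU scan, and it never competes for a doublewrite slot. The cost is that it stalls for as long as the cleaner takes.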

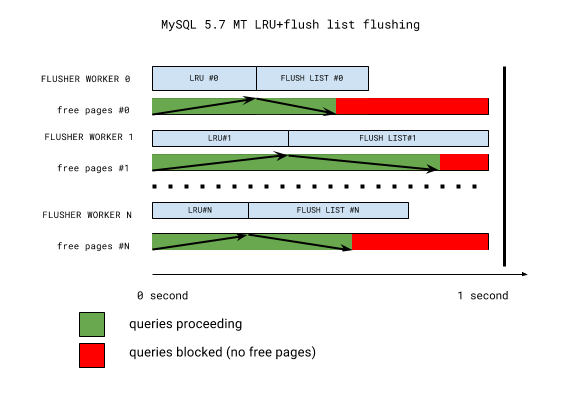

Even with this strategy it’s still a bad situation to be in, as it causes query stalls when page cleaner threads aren’t able to keep up with the free page demand. To understand why this happens, let’s look into a simplified scheme of InnoDB 5.7 multi-threaded LRU flushing:

The key takeaway from the picture is that LRU batch flushing does not necessarily happen when it’s needed the most. All buffer pool instances have their LRU lists flushed first (for free pages), and flush lists flushed second (for checkpoint age and buffer pool dirty page percentage targets). If the flush list flush is in progress, LRU flushing will have to wait until the next iteration. Further, all flushing is synchronized once per one-second iteration by the coordinator thread, which waits for everything to complete. This one-second mark may well become a thirty-second mark or more if one of the workers is stalled (the telltale sign is “InnoDB: page_cleaner: 1000ms intended loop took 49812ms” in the server error log). So if we have a very hot buffer pool instance, everything else will have to wait for it. And it’s long been known that buffer pool instances are not used uniformly (some are hotter and some are colder).
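
The effect of the per-iteration synchronization can be put as simple arithmetic. The sketch below assumes one worker per buffer pool instance and made-up timings; iteration_length is a hypothetical helper, not anything in the server.

```python
def iteration_length(lru_secs, flush_list_secs, target=1.0):
    """Length of one coordinator iteration, in seconds.

    Each instance does its LRU flush and then its flush list flush; the
    coordinator waits for the slowest instance before starting the next round.
    """
    per_instance = [lru + fl for lru, fl in zip(lru_secs, flush_list_secs)]
    return max(target, max(per_instance))


# Two instances: the second one is stuck in a long flush list flush.
stalled = iteration_length([0.25, 0.25], [0.5, 49.5])
healthy = iteration_length([0.1, 0.1], [0.2, 0.2])
```

With these numbers the first instance finishes its work in under a second, yet it gets no further LRU flushing until the stalled instance is done, so its free list can run dry in the meantime.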

A fix should:

– Decouple the “LRU list flushing” from “flush list flushing” so that the two can happen in parallel if needed.

– Recognize that different buffer pool instances require different amounts of flushing, and remove the synchronization between the instances.

We developed a design based on the above criteria, where each buffer pool instance has its own private LRU flusher thread. That thread monitors the free list length of its instance, flushes, and sleeps until the next free list length check. The sleep time is adjusted depending on the free list length: thus a hot instance LRU flusher may not sleep at all in order to keep up with the demand, while a cold instance flusher might only wake up once per second for a short check.
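
The sleep adjustment can be sketched as a small function. The thresholds (1% and 20% of the target length), the 50 ms step, and the one-second cap are illustrative assumptions rather than values taken from Percona Server; next_sleep_ms is a hypothetical name.

```python
def next_sleep_ms(current_sleep_ms, free_len, target_len,
                  max_sleep_ms=1000, step_ms=50):
    """Pick the sleep before the next free list check of one LRU flusher thread.

    All thresholds and step sizes are made up for this sketch.
    """
    if free_len < target_len // 100:
        return 0                                   # nearly empty: do not sleep at all
    if free_len > target_len // 5:
        # Plenty of free pages: back off gradually, up to one check per second.
        return min(current_sleep_ms + step_ms, max_sleep_ms)
    return current_sleep_ms                        # in between: keep the current pace


# A hot instance drops to zero sleep; a cold one drifts toward the cap.
hot = next_sleep_ms(200, free_len=0, target_len=1024)
cold = next_sleep_ms(980, free_len=1024, target_len=1024)
```

Because each instance runs this decision independently, a hot instance can flush continuously while the others stay mostly idle, which is what the synchronized scheme could not do.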

The LRU flushing scheme now looks as follows:

This has been implemented in the Percona Server 5.7.10-3 RC release, and this design simplified the code as well. LRU flushing heuristics are simple, and any LRU flushing is now removed from the legacy cleaner coordinator/worker threads, enabling more efficient flush list flushing as well. LRU flusher threads are the only threads that can flush a given buffer pool instance, enabling further simplification: for example, InnoDB recovery writer threads simply disappear.

Are we done then? No. With the single-page flushes and single-page flush doublewrite bottleneck gone, we hit the doublewrite buffer again. We’ll cover that in the next post.

The optimizations discussed here are fairly fine-grained and worth thinking through.